Topic Modelling on Fact-Checked Stories

Tags:

This Python notebook builds a topic model on the headlines of 13,000+ fact-checking stories in the Tattle archive, and identifies 7 broad "topics" within India's misinformation landscape.

Steps 1 to 7 cover the text processing and topic modelling process, while steps 8 and 9 interpret and visualise the resulting topics with an interactive pyLDAvis plot. Step 10 is a standalone n-gram analysis exercise that highlights the top words and word-pairs in the headlines.

Why headlines?

The body text of fact-checking stories sometimes contains noisy strings which are not related to the misinformation being fact-checked. The headlines, however, are focused on the misinformation. (We repeated this analysis with the body text and found no significant difference apart from the noise)

Brief description of the model

Latent Dirichlet Allocation is an unsupervised, probabilistic model that generates topic-document and word-topic probability distributions from a collection of text documents. Topics are themes that occur in documents. Here, document = story headline.

Process

- Getting the data from the Tattle database

- Text cleaning (removing noise, English / non-English separation using regex)

- Translating non-English headlines and storing translations locally

- Pre-processing all the headlines (tokenizing, stop word removal, lemmatizing, creating bigrams)

- Text transformation: creating a corpus of vectors

- Selecting the number of topics

- Building the topic model

- Topic interpretation

- Interactive model visualisation

- N-gram analysis of the headlines

- Applications

1. Getting the data

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

# Importing libraries

import os

import requests

import time

from time import sleep

from random import uniform

import datetime

from datetime import date

import csv

from pymongo import MongoClient

from dotenv import load_dotenv

load_dotenv()

import os

from os import environ

import re

import numpy as np

import pandas as pd

from pprint import pprint

import nltk

from nltk.corpus import stopwords

import spacy

import gensim

import gensim.corpora as corpora

from gensim.corpora import Dictionary

from gensim.utils import simple_preprocess

from gensim.models import ldamodel

from gensim.models import CoherenceModel

import re

from langdetect import detect

from gensim.models.phrases import Phrases, Phraser

from nltk import FreqDist

from nltk.corpus import RegexpTokenizer as regextoken

import matplotlib

from matplotlib import pyplot as plt

%matplotlib inline

import logging

logging.basicConfig(filename='lda_model.log', format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

import googletrans

from googletrans import Translator

import pyLDAvis

import pyLDAvis.gensim

from gensim import similarities

# Get data from factchecking sites MongoDB

def initialize_mongo():

mongo_url = "mongodb+srv://os.environ.get("FACTCHECK_DB_USERNAME"):os.environ.get("FACTCHECK_DB_PASSWORD")@tattle-data-fkpmg.mongodb.net/test?retryWrites=true&w=majority&ssl=true&ssl_cert_reqs=CERT_NONE"

cli = MongoClient(mongo_url)

db = cli[os.environ.get("FACTCHECK_DB_NAME")]

coll = db[os.environ.get("FACTCHECK_DB_COLLECTION")]

if coll.count_documents({}) > 0:

return coll

else:

print("Error accessing Mongo collection")

sys.exit()

coll = initialize_mongo()

df = pd.DataFrame.from_records(coll.find({}))

df.to_csv("factchecking_stories.csv", index=False)

df = pd.read_csv("factchecking_stories.csv")

df = df.drop_duplicates()

# Snapshot of headlines

df["headline"][0:3]

0 Madhu Kishwar tweets photoshopped image of Amu...

1 Photoshopped promo of ABP News over Chandrayaa...

2 Video of scuffle between men in khaki uniform ...

Name: headline, dtype: object2. Text cleaning

The headlines contain some special accents which the regex pattern for non-English text is unable to catch, so we remove these before proceeding to separate the English and non-English headlines.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

# Save headlines in a variable

raw_data = df["headline"].values.tolist()

# Defining a function to remove accented characters in the headlines

def data_dict(sentences):

return dict((sentence, ", ".join(simple_preprocess(str(sentence), deacc=True, max_len=100))) for sentence in sentences)

result = data_dict(raw_data)

# Separating non-English headlines using regex

pat = re.compile("[^\x00-\x7F]") # matches non-English characters

non_eng = [k for k,v in result.items() if pat.search(v)]

eng = [k for k,v in result.items() if not pat.search(v)]

print(len(eng), len(non_eng))

8298 52413. Translating non-English headlines

Googletrans is a free library that sends translation requests to the Google Translate API. Random time delays between requests are advised, else Google may (and probably will) block your ip address.

Translating the 5200+ non-English headlines took approximately 6.5 hours.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Translating non-English headlines using googletrans library

translator = Translator()

translations = []

for doc in non_eng:

translations.append(translator.translate(doc).text)

time.sleep(uniform(3,5))

# Saving the original and translated headlines for future reference

translated_headlines = dict(zip(non_eng, translations))

translations_df = pd.DataFrame(translated_headlines.items(), columns = ["headline", "translation"])

translations_df["original_english"] = 0

translations_df = translations_df.append(pd.DataFrame(eng, columns=['headline']), ignore_index=True, sort=True)

translations_df["original_english"].fillna(value=1, inplace = True)

translations_df["original_english"] = translations_df["original_english"].astype(int)

translations_df.to_csv("headlines_with_translations.csv")4. Text preprocessing

The text needs to be prepared for topic modelling by tokenizing it (splitting into individual lowercase words), removing stop words and common domain words like "fake" and "news", lemmatizing or reducing words to their root forms, and creating bigrams or pairs of words that frequently occur together in the text.

After some experimentation, we found that excluding all parts of speech except nouns and proper nouns during text preprocessing resulted in a more well-defined topics later.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

# Combining the headlines

all_headlines = eng + translations

# Tokenizing the headlines

all_tokens = list(sent_to_words(all_headlines))

# Creating stop words list

stop_words = stopwords.words("english")

# Adding domain words

stop_words.extend(["fake", "fact", "check", "checked", "factcheck", "news", "false",

"falsely", "true", "truth", "viral", "video", "image", "picture",

"photo", "claim", "claiming", "share", "clip", "misleading"])

# Stop word removal

data_stopped = [[word for word in doc if word not in stop_words] for doc in all_tokens]

# Creating bigrams

bigram = gensim.models.Phrases(data_stopped, min_count=10)

for idx in range(len(data_stopped)):

for token in bigram[data_stopped[idx]]:

if '_' in token:

# If token is bigram, add it to document

data_stopped[idx].append(token)

data_with_bigrams = data_stopped

# Lemmatizing i.e. reducing words to their root form

# Including only nouns as this improves both topic interpretability and coherence scores

def lemmatization(docs, allowed_postags=["NOUN", "PROPN"]):

nlp = spacy.load("en_core_web_sm")

docs_out = []

for sent in docs:

doc = nlp(" ".join(sent))

docs_out.append([token.lemma_ for token in doc if token.pos_ in allowed_postags])

return docs_out

data_lemmatized = lemmatization(data_with_bigrams, allowed_postags=["NOUN", "PROPN"])

# Removing any stopwords created because of lemmatization

data_cleaned = [[word for word in doc if word not in stop_words] for doc in data_lemmatized]5. Text transformation: creating a corpus of vectors

Topic modelling with the Gensim library involves certain concepts like documents, corpus, vectors and bag of words. These are explained here - https://radimrehurek.com/gensim/auto_examples/core/run_core_concepts.html

1

2

3

4

5

6

7

8

9

10

# Creating a dictionary

id2word = corpora.Dictionary(data_cleaned)

# Creating a document-term matrix

print('Number of unique tokens: %d' % len(id2word))

id2word.filter_extremes(no_below = 20)

# Creating a document-term matrix

corpus = [id2word.doc2bow(doc) for doc in data_cleaned]

Number of unique tokens: 75856. Selecting the number of topics

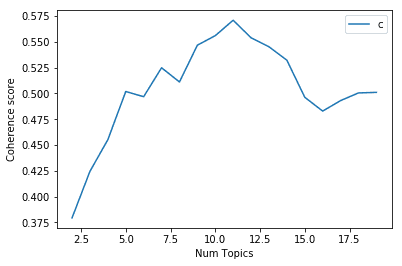

Topic coherence is an evaluation metric for topic models that measures the degree of semantic similarity between high scoring words in the topic. The graph below helps find an appropriate 'k' number of topics to model.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

def compute_coherence_values(dictionary, corpus, texts, limit, start=2, step=3):

"""

Compute c_v coherence for various number of topics

Parameters:

----------

dictionary : Gensim dictionary

corpus : Gensim corpus

texts : List of input texts

limit : Max num of topics

Returns:

-------

model_list : List of LDA topic models

coherence_values : Coherence values corresponding to the LDA model with respective number of topics

"""

coherence_values = []

model_list = []

for num_topics in range(start, limit, step):

model=ldamodel.LdaModel(corpus=corpus, id2word=dictionary, num_topics=num_topics, passes=5, per_word_topics = True, chunksize=100, alpha='auto',

eta='auto', eval_every=1, random_state = 0)

model_list.append(model)

coherencemodel = CoherenceModel(model=model, texts=texts, dictionary=dictionary, coherence='c_v')

coherence_values.append(coherencemodel.get_coherence())

return model_list, coherence_values

model_list, coherence_values = compute_coherence_values(dictionary=id2word, corpus=corpus, texts=data_cleaned, start=2, limit=20, step=1)

# Show graph

limit=20; start=2; step=1;

x = range(start, limit, step)

plt.plot(x, coherence_values)

plt.xlabel("Num Topics")

plt.ylabel("Coherence score")

plt.legend(("coherence_values"), loc='best')

plt.show()7. Building the topic model

We choose k=7 as k=11 produces the same number of meaningful topics (and four not-so-meaningful ones). This 'k' is supplied as a hyperparameter to the model. The model returns topics (clusters of words) along with their probabilities of belonging to that topic / cluster.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

lda_model = ldamodel.LdaModel(corpus = corpus, num_topics = 7, id2word = id2word, chunksize=100, alpha='auto',

eta='auto', passes = 5, random_state = 0)

# Printing the topic-word probabilities

pprint(lda_model.print_topics())

[(0,

'0.094*"bjp" + 0.057*"people" + 0.057*"medium" + 0.044*"modi" + '

'0.038*"social_media" + 0.036*"temple" + 0.032*"verification" + '

'0.030*"state" + 0.027*"stock" + 0.026*"statement"'),

(1,

'0.123*"virus" + 0.066*"box" + 0.064*"corona_virus" + 0.056*"year" + '

'0.041*"coronavirus" + 0.040*"student" + 0.039*"coro" + 0.035*"china" + '

'0.032*"message" + 0.031*"girl"'),

(2,

'0.101*"india" + 0.047*"post" + 0.041*"muslim" + 0.041*"country" + '

'0.041*"lockdown" + 0.036*"rally" + 0.033*"riot" + 0.030*"president" + '

'0.027*"house" + 0.025*"shah"'),

(3,

'0.140*"police" + 0.089*"woman" + 0.081*"name" + 0.049*"man" + 0.049*"child" '

'+ 0.038*"leader" + 0.028*"worker" + 0.027*"rumor" + 0.026*"khan" + '

'0.025*"death"'),

(4,

'0.090*"gandhi" + 0.069*"minister" + 0.041*"election" + 0.041*"event" + '

'0.040*"rahul_gandhi" + 0.035*"prime_minister" + 0.034*"color" + '

'0.032*"body" + 0.028*"vote" + 0.026*"communal_color"'),

(5,

'0.058*"rape" + 0.051*"time" + 0.037*"army" + 0.036*"evidence" + '

'0.036*"food" + 0.030*"mock" + 0.030*"mock_drill" + 0.028*"drill" + '

'0.028*"day" + 0.026*"footage"'),

(6,

'0.091*"delhi" + 0.081*"caa" + 0.055*"protest" + 0.038*"government" + '

'0.038*"person" + 0.035*"attack" + 0.032*"congress" + 0.030*"kashmir" + '

'0.030*"money" + 0.028*"pradesh"')]8. Topic interpretation

The topic-word probability distributions above can be interpreted as 7 topics or themes that describe the story headlines. Assigning labels or "meaning" to the topics is a subjective process that requires human judgement. Here is one possible set of interpretations:

- BJP, Modi & the media

- Coronavirus

- General national politics

- Identity / people

- Rahul Gandhi, Congress & national politics

- Law enforcement & crime

- Citizenship act protests & different states

9. Interactive model visualisation

The pyLDAvis plot below presents the results of the topic model in a more interpretable form. It provides a global view of the topics (and how they differ from each other), while at the same time allowing for a deep inspection of the terms most highly associated with each individual topic. Hovering over a topic "bubble" highlights the associated terms in red on the right. A bubble's size is proportional to the relative prevalence of that topic in the corpus.

To read more about the plot, check out this paper by the creators - https://nlp.stanford.edu/events/illvi2014/papers/sievert-illvi2014.pdf

1

2

3

pyLDAvis.enable_notebook()

vis = pyLDAvis.gensim.prepare(lda_model3, corpus, id2word, sort_topics=True, n_jobs=1)

vispath { fill: none; stroke: none; }

.xaxis .tick.major { fill: black; stroke: black; stroke-width: 0.1; opacity: 0.7; }

.slideraxis { fill: black; stroke: black; stroke-width: 0.4; opacity: 1; }

text { font-family: sans-serif; font-size: 11px; }

var ldavis_el202961124743444486412651638_data = {"mdsDat": {"x": [-0.09680300942218958, 0.32185953256715333, 0.11843308216375723, -0.15786855794287866, -0.26101340209211904, 0.0850534551939429, -0.00966110046766587], "y": [-0.09452513706467737, -0.2012867131444191, 0.4157678364906971, -0.04893402423062434, -0.005078895192067473, -0.03476575266682092, -0.03117731419208802], "topics": [1, 2, 3, 4, 5, 6, 7], "cluster": [1, 1, 1, 1, 1, 1, 1], "Freq": [18.613168716430664, 17.317359924316406, 15.620244979858398, 13.766229629516602, 13.321460723876953, 11.680825233459473, 9.680708885192871]}, "tinfo": {"Category": ["Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Default", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic1", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic2", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic3", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic4", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic5", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic6", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7", "Topic7"], "Freq": [869.0, 649.0, 699.0, 553.0, 625.0, 553.0, 556.0, 500.0, 419.0, 352.0, 421.0, 419.0, 322.0, 377.0, 349.0, 298.0, 305.0, 304.0, 328.0, 225.0, 257.0, 282.0, 196.0, 264.0, 260.0, 226.0, 225.0, 266.0, 224.0, 219.0, 698.1888427734375, 420.4351501464844, 418.802734375, 327.71746826171875, 281.5549621582031, 265.2623596191406, 235.1444549560547, 220.0459747314453, 202.8692626953125, 191.0956268310547, 135.85748291015625, 135.01585388183594, 136.69847106933594, 129.0937957763672, 124.88054656982422, 120.52294921875, 119.0954818725586, 116.5804443359375, 112.864013671875, 107.10236358642578, 104.70463562011719, 99.41806030273438, 97.26445770263672, 93.32624053955078, 93.30060577392578, 83.1642837524414, 181.0066375732422, 69.490478515625, 67.1014404296875, 65.4608154296875, 625.0946655273438, 555.8406982421875, 376.2816467285156, 263.2202453613281, 259.930908203125, 243.2035369873047, 221.85943603515625, 207.60145568847656, 206.54234313964844, 195.5810546875, 195.51231384277344, 133.77444458007812, 111.54082489013672, 110.03955841064453, 107.89057159423828, 106.0445556640625, 93.55343627929688, 93.88829803466797, 93.27379608154297, 91.25531768798828, 89.2413330078125, 87.28921508789062, 85.11912536621094, 80.21784210205078, 81.28910064697266, 72.69586944580078, 70.70909118652344, 66.2198486328125, 65.4062728881836, 62.69060516357422, 868.5487670898438, 553.125, 500.0487365722656, 304.93408203125, 303.4142761230469, 236.53709411621094, 173.28500366210938, 168.42922973632812, 158.7606201171875, 156.51063537597656, 144.787353515625, 141.49212646484375, 121.9958267211914, 129.643798828125, 113.71488189697266, 89.0584716796875, 87.64140319824219, 86.76089477539062, 86.32020568847656, 83.45951843261719, 82.2347412109375, 73.63748931884766, 63.09907150268555, 62.63433074951172, 62.41517639160156, 62.21247100830078, 59.42239761352539, 55.900447845458984, 55.63032913208008, 54.5949821472168, 64.76901245117188, 552.494140625, 256.1957702636719, 225.27142333984375, 224.23793029785156, 223.42628479003906, 197.25868225097656, 181.51266479492188, 164.8415069580078, 148.24501037597656, 137.99261474609375, 134.9283905029297, 121.62744903564453, 114.57527923583984, 102.40070343017578, 93.74873352050781, 85.37808227539062, 78.57308959960938, 77.18280029296875, 74.27439880371094, 67.80770111083984, 66.54544067382812, 64.79822540283203, 63.01530456542969, 65.57853698730469, 62.645870208740234, 62.537567138671875, 58.452701568603516, 57.79158020019531, 57.39969253540039, 56.26670455932617, 56.40860366821289, 648.9902954101562, 351.6218566894531, 297.7640686035156, 218.88925170898438, 213.49400329589844, 186.65948486328125, 170.66346740722656, 162.22398376464844, 141.8873291015625, 120.79631042480469, 105.19546508789062, 101.1507568359375, 88.93756103515625, 79.92227172851562, 78.6008529663086, 71.391845703125, 68.32215881347656, 63.47147750854492, 62.00764846801758, 61.1439208984375, 59.746360778808594, 53.95633316040039, 48.84767532348633, 44.844730377197266, 42.9484977722168, 42.07223892211914, 41.679683685302734, 39.82577896118164, 38.40555953979492, 37.53164291381836, 337.55059814453125, 92.82905578613281, 204.43731689453125, 418.5194091796875, 321.3217468261719, 191.11068725585938, 190.9911651611328, 187.15335083007812, 162.88998413085938, 159.6423797607422, 146.31044006347656, 127.73458862304688, 119.6568603515625, 106.12444305419922, 104.4969482421875, 103.39991760253906, 99.70548248291016, 89.09049224853516, 86.20008850097656, 79.10782623291016, 77.80997467041016, 70.45279693603516, 69.21394348144531, 68.08279418945312, 66.83040618896484, 65.0103988647461, 63.02021026611328, 62.19595718383789, 60.84129333496094, 61.61081314086914, 57.69501495361328, 57.331031799316406, 55.398773193359375, 224.80142211914062, 195.8890838623047, 140.46389770507812, 136.7405242919922, 136.78756713867188, 116.53118896484375, 115.92755126953125, 107.50430297851562, 106.13885498046875, 100.69060516357422, 92.29374694824219, 85.37361145019531, 83.64075469970703, 80.4362564086914, 78.80231475830078, 75.74420166015625, 70.26490020751953, 63.24070739746094, 63.0159912109375, 60.76298141479492, 58.36465072631836, 57.90799331665039, 57.38543701171875, 56.2412223815918, 55.59008026123047, 55.048561096191406, 54.27850341796875, 54.12855529785156, 52.50811004638672, 44.134986877441406], "Term": ["police", "virus", "bjp", "india", "delhi", "woman", "caa", "name", "gandhi", "box", "people", "medium", "minister", "protest", "corona_virus", "year", "man", "child", "modi", "rape", "post", "social_media", "time", "government", "person", "muslim", "country", "temple", "lockdown", "coronavirus", "bjp", "people", "medium", "modi", "social_media", "temple", "verification", "state", "stock", "statement", "protester", "family", "citizenship", "kejriwal", "photoshop", "old_photo", "newspaper", "tweet", "old_photos", "islam", "support", "report", "arvind_kejriwal", "story", "poster", "list", "data_verification", "bird", "number", "bottle", "delhi", "caa", "protest", "government", "person", "attack", "congress", "kashmir", "money", "pradesh", "flag", "performance", "case", "incident", "bagh", "caa_protest", "fire", "shaheen_bagh", "speech", "bangladesh", "mla", "film", "context", "airport", "italy", "youth", "boy", "uttar", "husband", "drug", "police", "woman", "name", "man", "child", "leader", "worker", "rumor", "khan", "death", "party", "violence", "assam", "vaccine", "note", "angle", "hindus", "bihar", "rss", "victim", "bjp_leader", "muslim_man", "delhi_police", "mob", "mosque", "yogi", "company", "baton", "nrc", "communal", "czech", "india", "post", "muslim", "country", "lockdown", "rally", "riot", "president", "house", "shah", "home", "tv", "datum", "infection", "law", "hand", "stone", "sri", "amit_shah", "kolkata", "gujarat", "doctor", "water", "delhi_riot", "show", "anil", "sharing", "amit", "hotel", "train", "opposition", "virus", "box", "year", "coronavirus", "student", "china", "message", "girl", "road", "jamia", "information", "jnu", "patient", "bank", "member", "way", "health", "ram", "mumbai", "hospital", "world", "ministry", "year_old", "community", "disease", "mother", "wrong", "injury", "policeman", "order", "corona_virus", "pack", "coro", "gandhi", "minister", "election", "event", "rahul_gandhi", "prime_minister", "color", "body", "vote", "communal_color", "campaign", "comment", "photograph", "wife", "suicide", "sonia", "foot", "murder", "bill", "kill", "scene", "priyanka", "part", "baby", "movie", "group", "cctv", "trump", "chief_minister", "daughter", "rape", "time", "army", "food", "evidence", "mock", "mock_drill", "drill", "day", "footage", "slogan", "soldier", "maharashtra", "crore", "indian_army", "nothing", "bomb", "kumar", "game", "run", "air", "lion", "strike", "april", "celebration", "account", "force", "republic", "nehru", "facebook"], "Total": [869.0, 649.0, 699.0, 553.0, 625.0, 553.0, 556.0, 500.0, 419.0, 352.0, 421.0, 419.0, 322.0, 377.0, 349.0, 298.0, 305.0, 304.0, 328.0, 225.0, 257.0, 282.0, 196.0, 264.0, 260.0, 226.0, 225.0, 266.0, 224.0, 219.0, 699.0114135742188, 421.2584228515625, 419.6248779296875, 328.5393371582031, 282.3765869140625, 266.0848693847656, 235.96768188476562, 220.86883544921875, 203.69338989257812, 191.91737365722656, 136.680908203125, 135.83935546875, 137.56385803222656, 129.91558837890625, 125.70299530029297, 121.34651947021484, 119.91887664794922, 117.40215301513672, 113.68909454345703, 107.92507934570312, 105.5280532836914, 100.24089813232422, 98.08659362792969, 94.14916229248047, 94.12367248535156, 83.98799896240234, 183.06121826171875, 70.31416320800781, 67.92338562011719, 66.28515625, 625.9370727539062, 556.6829833984375, 377.12188720703125, 264.060791015625, 260.7713317871094, 244.04348754882812, 222.69894409179688, 208.4410858154297, 207.38453674316406, 196.4205322265625, 196.35227966308594, 134.61846923828125, 112.3813705444336, 110.8804702758789, 108.7342300415039, 106.8855972290039, 94.39337158203125, 94.73233032226562, 94.11436462402344, 92.09552764892578, 90.08232116699219, 88.12916564941406, 85.95897674560547, 81.0600814819336, 82.18518829345703, 73.53640747070312, 71.55030059814453, 67.05945587158203, 66.24886322021484, 63.53553009033203, 869.3876953125, 553.9635620117188, 500.8872985839844, 305.7728271484375, 304.25244140625, 237.3752899169922, 174.12452697753906, 169.2717742919922, 159.59930419921875, 157.3491973876953, 145.6258544921875, 142.33108520507812, 122.83491516113281, 130.56130981445312, 114.552978515625, 89.90327453613281, 88.48147583007812, 87.59938049316406, 87.15855407714844, 84.29923248291016, 83.07377624511719, 74.48355865478516, 63.937965393066406, 63.47373962402344, 63.25409698486328, 63.051204681396484, 60.262298583984375, 56.739471435546875, 56.48041915893555, 55.43473434448242, 66.22378540039062, 553.3424072265625, 257.0435791015625, 226.12002563476562, 225.08753967285156, 224.28152465820312, 198.10768127441406, 182.3640594482422, 165.69081115722656, 149.0937957763672, 138.84033203125, 135.77833557128906, 122.4765625, 115.42652893066406, 103.26197052001953, 94.59849548339844, 86.2278823852539, 79.42214965820312, 78.03015899658203, 75.12187194824219, 68.6571044921875, 67.39350128173828, 65.64910888671875, 63.86391830444336, 66.46525573730469, 63.49372100830078, 63.38623809814453, 59.301876068115234, 58.639251708984375, 58.2495231628418, 57.11502456665039, 57.26002883911133, 649.8746948242188, 352.51190185546875, 298.6455993652344, 219.77549743652344, 214.3758544921875, 187.54273986816406, 171.54542541503906, 163.1055908203125, 142.7705841064453, 121.69551849365234, 106.07762908935547, 102.0323257446289, 89.82001495361328, 80.8037338256836, 79.48382568359375, 72.2745590209961, 69.20529174804688, 64.35344696044922, 62.88945770263672, 62.026641845703125, 60.627655029296875, 54.84024429321289, 49.72892379760742, 45.72787857055664, 43.83144760131836, 42.954750061035156, 42.56513595581055, 40.724674224853516, 39.28815841674805, 38.41437530517578, 349.150390625, 95.81779479980469, 248.78604125976562, 419.36004638671875, 322.162841796875, 191.9521026611328, 191.8330841064453, 187.99388122558594, 163.73068237304688, 160.4908447265625, 147.15420532226562, 128.5757598876953, 120.50839233398438, 106.96698760986328, 105.33956909179688, 104.24146270751953, 100.546875, 89.93275451660156, 87.04151153564453, 79.95301818847656, 78.65145874023438, 71.2977294921875, 70.05838775634766, 68.92508697509766, 67.67119598388672, 65.85277557373047, 63.86159896850586, 63.03912353515625, 61.6854362487793, 62.467041015625, 58.53699493408203, 58.17249298095703, 56.24005126953125, 225.6089630126953, 196.69723510742188, 141.2703399658203, 137.54904174804688, 137.59739685058594, 117.33794403076172, 116.73429107666016, 108.3111801147461, 106.94602966308594, 101.5001220703125, 93.10082244873047, 86.18026733398438, 84.44786071777344, 81.24366760253906, 79.60884094238281, 76.55236053466797, 71.07340240478516, 64.0482177734375, 63.82326126098633, 61.57375717163086, 59.17106628417969, 58.71742630004883, 58.192352294921875, 57.050132751464844, 56.398826599121094, 55.8550910949707, 55.0851936340332, 54.93600082397461, 53.31494140625, 44.941593170166016], "loglift": [30.0, 29.0, 28.0, 27.0, 26.0, 25.0, 24.0, 23.0, 22.0, 21.0, 20.0, 19.0, 18.0, 17.0, 16.0, 15.0, 14.0, 13.0, 12.0, 11.0, 10.0, 9.0, 8.0, 7.0, 6.0, 5.0, 4.0, 3.0, 2.0, 1.0, 1.6800999641418457, 1.6792999505996704, 1.6792999505996704, 1.6787999868392944, 1.6784000396728516, 1.6782000064849854, 1.6778000593185425, 1.6776000261306763, 1.6771999597549438, 1.6770000457763672, 1.6753000020980835, 1.6751999855041504, 1.6749999523162842, 1.6749999523162842, 1.6747000217437744, 1.6744999885559082, 1.674399971961975, 1.674299955368042, 1.6740000247955322, 1.6735999584197998, 1.6734999418258667, 1.6730999946594238, 1.6728999614715576, 1.6725000143051147, 1.6725000143051147, 1.6713999509811401, 1.6699999570846558, 1.6694999933242798, 1.669100046157837, 1.6687999963760376, 1.7520999908447266, 1.7518999576568604, 1.7511999607086182, 1.7503000497817993, 1.7502000331878662, 1.75, 1.7496999502182007, 1.749400019645691, 1.749400019645691, 1.7491999864578247, 1.7491999864578247, 1.7472000122070312, 1.746000051498413, 1.7458000183105469, 1.7457000017166138, 1.7455999851226807, 1.7445000410079956, 1.7445000410079956, 1.7445000410079956, 1.7443000078201294, 1.7440999746322632, 1.743899941444397, 1.7436000108718872, 1.7430000305175781, 1.7424999475479126, 1.7419999837875366, 1.7416000366210938, 1.7409000396728516, 1.7407000064849854, 1.7401000261306763, 1.8555999994277954, 1.8551000356674194, 1.8549000024795532, 1.8538999557495117, 1.8538000583648682, 1.8530999422073364, 1.8517999649047852, 1.8516000509262085, 1.8513000011444092, 1.8513000011444092, 1.8508000373840332, 1.8507000207901, 1.8496999740600586, 1.8495999574661255, 1.8493000268936157, 1.8472000360488892, 1.847100019454956, 1.847000002861023, 1.8468999862670898, 1.84660005569458, 1.846500039100647, 1.8451999425888062, 1.843400001525879, 1.8432999849319458, 1.8432999849319458, 1.8431999683380127, 1.8425999879837036, 1.8416999578475952, 1.8414000272750854, 1.8413000106811523, 1.8344000577926636, 1.9814000129699707, 1.979599952697754, 1.979200005531311, 1.979200005531311, 1.979099988937378, 1.978700041770935, 1.9782999753952026, 1.9778000116348267, 1.9772000312805176, 1.9767999649047852, 1.976699948310852, 1.9759999513626099, 1.9754999876022339, 1.9745999574661255, 1.9738999605178833, 1.9730000495910645, 1.9722000360488892, 1.972000002861023, 1.97160005569458, 1.9704999923706055, 1.9702999591827393, 1.9699000120162964, 1.969599962234497, 1.969499945640564, 1.969499945640564, 1.969499945640564, 1.968500018119812, 1.968400001525879, 1.9682999849319458, 1.968000054359436, 1.968000054359436, 2.014400005340576, 2.0132999420166016, 2.0127999782562256, 2.0118000507354736, 2.011699914932251, 2.0111000537872314, 2.0106000900268555, 2.0104000568389893, 2.0095999240875244, 2.0083999633789062, 2.0074000358581543, 2.0071001052856445, 2.0058999061584473, 2.0048000812530518, 2.0046000480651855, 2.003499984741211, 2.003000020980835, 2.002000093460083, 2.001699924468994, 2.001499891281128, 2.001199960708618, 1.999500036239624, 1.9979000091552734, 1.9962999820709229, 1.9953999519348145, 1.9950000047683716, 1.9947999715805054, 1.993499994277954, 1.9931000471115112, 1.9924999475479126, 1.9819999933242798, 1.9840999841690063, 1.8194999694824219, 2.14520001411438, 2.1445999145507812, 2.1428000926971436, 2.1428000926971436, 2.142699956893921, 2.1421000957489014, 2.141900062561035, 2.1414999961853027, 2.140700101852417, 2.1401000022888184, 2.1393001079559326, 2.13919997215271, 2.1391000747680664, 2.1387999057769775, 2.1377999782562256, 2.137500047683716, 2.1366000175476074, 2.1364998817443848, 2.1352999210357666, 2.1350998878479004, 2.1349000930786133, 2.134700059890747, 2.1342999935150146, 2.134000062942505, 2.1338000297546387, 2.1333999633789062, 2.1333999633789062, 2.132699966430664, 2.132699966430664, 2.132200002670288, 2.331399917602539, 2.330899953842163, 2.3292999267578125, 2.3290998935699463, 2.3290998935699463, 2.3280999660491943, 2.3280999660491943, 2.3276000022888184, 2.327500104904175, 2.3269999027252197, 2.3262999057769775, 2.3255999088287354, 2.3254001140594482, 2.325000047683716, 2.324899911880493, 2.324399948120117, 2.3236000537872314, 2.3222999572753906, 2.3222999572753906, 2.3217999935150146, 2.3213000297546387, 2.321199893951416, 2.3210999965667725, 2.3208000659942627, 2.3206000328063965, 2.320499897003174, 2.3203001022338867, 2.320199966430664, 2.3197999000549316, 2.3169000148773193], "logprob": [30.0, 29.0, 28.0, 27.0, 26.0, 25.0, 24.0, 23.0, 22.0, 21.0, 20.0, 19.0, 18.0, 17.0, 16.0, 15.0, 14.0, 13.0, 12.0, 11.0, 10.0, 9.0, 8.0, 7.0, 6.0, 5.0, 4.0, 3.0, 2.0, 1.0, -2.360100030899048, -2.867300033569336, -2.8712000846862793, -3.1164000034332275, -3.268199920654297, -3.3278000354766846, -3.4484000205993652, -3.514699935913086, -3.5959999561309814, -3.6558001041412354, -3.996999979019165, -4.003200054168701, -3.990799903869629, -4.047999858856201, -4.081200122833252, -4.116700172424316, -4.128600120544434, -4.150000095367432, -4.182400226593018, -4.234799861907959, -4.257400035858154, -4.309199810028076, -4.331099987030029, -4.372499942779541, -4.372700214385986, -4.487800121307373, -3.7100000381469727, -4.667399883270264, -4.702400207519531, -4.727099895477295, -2.3984999656677246, -2.515899896621704, -2.906100034713745, -3.263400077819824, -3.2760000228881836, -3.3424999713897705, -3.4344000816345215, -3.5007998943328857, -3.5058999061584473, -3.5604000091552734, -3.560800075531006, -3.9402999877929688, -4.122000217437744, -4.1356000900268555, -4.155300140380859, -4.172500133514404, -4.297900199890137, -4.294300079345703, -4.300899982452393, -4.322700023651123, -4.345099925994873, -4.367199897766113, -4.392399787902832, -4.451700210571289, -4.438399791717529, -4.550099849700928, -4.5777997970581055, -4.643400192260742, -4.655799865722656, -4.698200225830078, -1.9664000272750854, -2.4177000522613525, -2.5185999870300293, -3.013200044631958, -3.018199920654297, -3.267199993133545, -3.5782999992370605, -3.606800079345703, -3.6658999919891357, -3.6800999641418457, -3.757999897003174, -3.7809998989105225, -3.92930006980896, -3.868499994277954, -3.9995999336242676, -4.24399995803833, -4.260000228881836, -4.270100116729736, -4.275199890136719, -4.308899879455566, -4.323699951171875, -4.434100151062012, -4.588600158691406, -4.5960001945495605, -4.5995001792907715, -4.602700233459473, -4.648600101470947, -4.709700107574463, -4.7144999504089355, -4.73330020904541, -4.562399864196777, -2.2925000190734863, -3.061000108718872, -3.1895999908447266, -3.194200038909912, -3.1977999210357666, -3.3224000930786133, -3.405600070953369, -3.5018999576568604, -3.608099937438965, -3.6796998977661133, -3.702199935913086, -3.805999994277954, -3.8657000064849854, -3.9779999256134033, -4.066299915313721, -4.159800052642822, -4.2428998947143555, -4.260700225830078, -4.299200057983398, -4.390200138092041, -4.408999919891357, -4.4355998039245605, -4.463500022888184, -4.423699855804443, -4.469399929046631, -4.471199989318848, -4.538700103759766, -4.550099849700928, -4.5569000244140625, -4.5767998695373535, -4.5742998123168945, -2.0987000465393066, -2.7114999294281006, -2.8777999877929688, -3.185499906539917, -3.2105000019073486, -3.3447999954223633, -3.4344000816345215, -3.485100030899048, -3.61899995803833, -3.7799999713897705, -3.918299913406372, -3.9574999809265137, -4.086100101470947, -4.192999839782715, -4.209700107574463, -4.3059000968933105, -4.349800109863281, -4.423500061035156, -4.446800231933594, -4.4608001708984375, -4.484000205993652, -4.585899829864502, -4.685400009155273, -4.770899772644043, -4.8140997886657715, -4.834700107574463, -4.844099998474121, -4.889599800109863, -4.925899982452393, -4.94890022277832, -2.7523999214172363, -4.043300151824951, -3.2537999153137207, -2.405900001525879, -2.6702001094818115, -3.1898000240325928, -3.1903998851776123, -3.210700035095215, -3.349600076675415, -3.3696999549865723, -3.456899881362915, -3.5927000045776367, -3.6579999923706055, -3.7780001163482666, -3.7934999465942383, -3.803999900817871, -3.840399980545044, -3.953000068664551, -3.9860000610351562, -4.071800231933594, -4.088399887084961, -4.187699794769287, -4.205399990081787, -4.22189998626709, -4.240499973297119, -4.268099784851074, -4.299200057983398, -4.312399864196777, -4.334400177001953, -4.321800231933594, -4.387499809265137, -4.393799781799316, -4.428100109100342, -2.839600086212158, -2.977299928665161, -3.3099000453948975, -3.336699962615967, -3.336400032043457, -3.4967000484466553, -3.5018999576568604, -3.5773000717163086, -3.590100049972534, -3.6428000926971436, -3.7298998832702637, -3.807800054550171, -3.8282999992370605, -3.8673999309539795, -3.8879001140594482, -3.927500009536743, -4.002600193023682, -4.107900142669678, -4.111400127410889, -4.147900104522705, -4.1880998611450195, -4.196000099182129, -4.204999923706055, -4.225200176239014, -4.236800193786621, -4.246600151062012, -4.260700225830078, -4.263500213623047, -4.293900012969971, -4.467599868774414]}, "token.table": {"Topic": [7, 7, 2, 4, 4, 3, 4, 7, 7, 1, 3, 2, 6, 2, 2, 5, 3, 3, 6, 1, 1, 3, 6, 7, 1, 5, 2, 2, 2, 6, 2, 6, 7, 6, 3, 5, 1, 6, 6, 3, 6, 5, 3, 2, 2, 1, 2, 3, 4, 5, 6, 7, 1, 2, 3, 4, 5, 6, 7, 5, 4, 7, 3, 6, 1, 6, 4, 6, 7, 3, 2, 3, 4, 5, 4, 7, 2, 6, 6, 7, 7, 1, 2, 2, 2, 7, 6, 7, 7, 7, 6, 5, 2, 6, 4, 4, 5, 3, 4, 5, 4, 4, 2, 2, 4, 7, 4, 5, 5, 1, 2, 5, 5, 2, 1, 3, 6, 4, 7, 4, 3, 7, 1, 4, 7, 3, 1, 5, 5, 6, 5, 2, 3, 7, 7, 1, 2, 3, 5, 6, 5, 6, 4, 3, 3, 7, 1, 3, 7, 3, 1, 1, 1, 4, 5, 1, 3, 5, 6, 3, 5, 1, 2, 2, 6, 1, 3, 5, 4, 1, 2, 4, 6, 6, 2, 1, 6, 4, 5, 7, 1, 7, 4, 5, 3, 3, 7, 6, 4, 2, 4, 4, 7, 1, 7, 6, 2, 4, 1, 1, 1, 4, 1, 7, 5, 6, 1, 1, 7, 4, 6, 4, 1, 2, 3, 1, 3, 3, 5, 6, 4, 5, 6, 3, 3, 5, 5, 5, 5, 3, 2], "Freq": [0.9846909046173096, 0.9802088141441345, 0.9869222640991211, 0.9890985488891602, 0.9850659966468811, 0.989952802658081, 0.9939066171646118, 0.9815928339958191, 0.9910077452659607, 0.988922119140625, 0.9932029247283936, 0.9957242012023926, 0.9865083694458008, 0.9932475090026855, 0.9881044626235962, 0.9900532364845276, 0.9869672656059265, 0.993157684803009, 0.9817984700202942, 0.9813101291656494, 0.9985530972480774, 0.9870744347572327, 0.9921565055847168, 0.984897255897522, 0.9806116819381714, 0.9985478520393372, 0.9923089146614075, 0.9987730979919434, 0.9917145371437073, 0.9909599423408508, 0.9966064691543579, 0.9925233721733093, 0.9929284453392029, 0.9798445701599121, 0.9958835244178772, 0.9971060752868652, 0.9959011077880859, 0.9969416260719299, 0.9872833490371704, 0.9921576976776123, 0.9957812428474426, 0.9840824007987976, 0.979053258895874, 0.996861457824707, 0.9888437986373901, 0.02813662588596344, 0.02009759098291397, 0.024117108434438705, 0.052253734320402145, 0.8199816942214966, 0.012058554217219353, 0.04019518196582794, 0.014320476911962032, 0.0028640953823924065, 0.0028640953823924065, 0.00859228614717722, 0.9680641889572144, 0.0028640953823924065, 0.0028640953823924065, 0.9964714050292969, 0.9951683878898621, 0.9846921563148499, 0.9815204739570618, 0.015100314281880856, 0.9887402653694153, 0.00546265346929431, 0.9963047504425049, 0.9779507517814636, 0.991154134273529, 0.9977807402610779, 0.9985029101371765, 0.9853300452232361, 0.9930000305175781, 0.9810308218002319, 0.9901124238967896, 0.9971269965171814, 0.9915711879730225, 0.9950398802757263, 0.9956572651863098, 0.9956583976745605, 0.979048490524292, 0.9938209652900696, 0.987187385559082, 0.995832622051239, 0.9982059001922607, 0.9960083961486816, 0.9880802631378174, 0.9950727224349976, 0.980299711227417, 0.9871008992195129, 0.9991414546966553, 0.993221640586853, 0.9959827661514282, 0.9888882040977478, 0.9941611289978027, 0.9857600331306458, 0.9825838208198547, 0.9945584535598755, 0.9942675828933716, 0.9834483861923218, 0.9785487651824951, 0.9926636815071106, 0.981148898601532, 0.9920592904090881, 0.9975740313529968, 0.9923520684242249, 0.9877789616584778, 0.989841103553772, 0.9822055101394653, 0.9914284944534302, 0.9855790734291077, 0.9942847490310669, 0.9898823499679565, 0.9978838562965393, 0.9929524660110474, 0.9962449669837952, 0.9848927855491638, 0.990429162979126, 0.9836339354515076, 0.9936733245849609, 0.9984189867973328, 0.9877817034721375, 0.9882364273071289, 0.994286060333252, 0.9946966171264648, 0.9974725246429443, 0.9985108375549316, 0.9939128756523132, 0.9968205094337463, 0.9963905215263367, 0.984678328037262, 0.9879851937294006, 0.9925364255905151, 0.9971199035644531, 0.9937097430229187, 0.9983583688735962, 0.9981457591056824, 0.980173647403717, 0.977773129940033, 0.9835162162780762, 0.985856831073761, 0.9917171597480774, 0.9950467944145203, 0.9935078620910645, 0.9982285499572754, 0.9940928220748901, 0.9923375248908997, 0.9951727390289307, 0.9927845597267151, 0.9914940595626831, 0.9864054918289185, 0.9971444010734558, 0.9939388036727905, 0.9779946208000183, 0.9892129898071289, 0.010436474345624447, 0.010436474345624447, 0.9705921411514282, 0.9870502948760986, 0.99570232629776, 0.990870475769043, 0.997012734413147, 0.9954057335853577, 0.9970421195030212, 0.9880905151367188, 0.9944074749946594, 0.9995540380477905, 0.9672125577926636, 0.995940089225769, 0.9880617260932922, 0.997859001159668, 0.9958307147026062, 0.995537281036377, 0.9900814890861511, 0.9970251321792603, 0.9950182437896729, 0.9947132468223572, 0.9944086670875549, 0.9789685606956482, 0.9973008036613464, 0.9876208305358887, 0.9829619526863098, 0.9980036616325378, 0.994602620601654, 0.9867075085639954, 0.9924867749214172, 0.9906817674636841, 0.9865783452987671, 0.9939475059509277, 0.9922694563865662, 0.9780466556549072, 0.9922240972518921, 0.9881760478019714, 0.9986663460731506, 0.9863046407699585, 0.9880343079566956, 0.9881594777107239, 0.9867979288101196, 0.9960662722587585, 0.9952199459075928, 0.9965959191322327, 0.9946847558021545, 0.9877942204475403, 0.9795101284980774, 0.9935820698738098, 0.9896283149719238, 0.9949960708618164, 0.995922863483429, 0.9964553117752075, 0.9804775714874268, 0.990826427936554, 0.9961089491844177, 0.9965745806694031, 0.9842012524604797, 0.9957007765769958, 0.9958990812301636, 0.9845878481864929, 0.9906479716300964, 0.9986540675163269, 0.9955220222473145, 0.986472487449646, 0.9823650121688843, 0.9945610165596008, 0.9982606172561646, 0.9935418367385864, 0.989647388458252, 0.986723005771637, 0.9978382587432861, 0.9853420853614807, 0.9833277463912964, 0.9927055239677429], "Term": ["account", "air", "airport", "amit", "amit_shah", "angle", "anil", "april", "army", "arvind_kejriwal", "assam", "attack", "baby", "bagh", "bangladesh", "bank", "baton", "bihar", "bill", "bird", "bjp", "bjp_leader", "body", "bomb", "bottle", "box", "boy", "caa", "caa_protest", "campaign", "case", "cctv", "celebration", "chief_minister", "child", "china", "citizenship", "color", "comment", "communal", "communal_color", "community", "company", "congress", "context", "coro", "coro", "coro", "coro", "coro", "coro", "coro", "corona_virus", "corona_virus", "corona_virus", "corona_virus", "corona_virus", "corona_virus", "corona_virus", "coronavirus", "country", "crore", "czech", "czech", "data_verification", "data_verification", "datum", "daughter", "day", "death", "delhi", "delhi_police", "delhi_riot", "disease", "doctor", "drill", "drug", "election", "event", "evidence", "facebook", "family", "film", "fire", "flag", "food", "foot", "footage", "force", "game", "gandhi", "girl", "government", "group", "gujarat", "hand", "health", "hindus", "home", "hospital", "hotel", "house", "husband", "incident", "india", "indian_army", "infection", "information", "injury", "islam", "italy", "jamia", "jnu", "kashmir", "kejriwal", "khan", "kill", "kolkata", "kumar", "law", "leader", "lion", "list", "lockdown", "maharashtra", "man", "medium", "member", "message", "minister", "ministry", "mla", "mob", "mock", "mock_drill", "modi", "money", "mosque", "mother", "movie", "mumbai", "murder", "muslim", "muslim_man", "name", "nehru", "newspaper", "note", "nothing", "nrc", "number", "old_photo", "old_photos", "opposition", "order", "pack", "pack", "pack", "part", "party", "patient", "people", "performance", "person", "photograph", "photoshop", "police", "policeman", "post", "poster", "pradesh", "president", "prime_minister", "priyanka", "protest", "protester", "rahul_gandhi", "rally", "ram", "rape", "report", "republic", "riot", "road", "rss", "rumor", "run", "scene", "shah", "shaheen_bagh", "sharing", "show", "slogan", "social_media", "soldier", "sonia", "speech", "sri", "state", "statement", "stock", "stone", "story", "strike", "student", "suicide", "support", "temple", "time", "train", "trump", "tv", "tweet", "uttar", "vaccine", "verification", "victim", "violence", "virus", "vote", "water", "way", "wife", "woman", "worker", "world", "wrong", "year", "year_old", "yogi", "youth"]}, "R": 30, "lambda.step": 0.01, "plot.opts": {"xlab": "PC1", "ylab": "PC2"}, "topic.order": [1, 7, 4, 3, 2, 5, 6]};

function LDAvis_load_lib(url, callback){ var s = document.createElement('script'); s.src = url; s.async = true; s.onreadystatechange = s.onload = callback; s.onerror = function(){console.warn("failed to load library " + url);}; document.getElementsByTagName("head")[0].appendChild(s); }

if(typeof(LDAvis) !== "undefined"){ // already loaded: just create the visualization !function(LDAvis){ new LDAvis("#" + "ldavis_el202961124743444486412651638", ldavis_el202961124743444486412651638_data); }(LDAvis); }else if(typeof define === "function" && define.amd){ // require.js is available: use it to load d3/LDAvis require.config({paths: {d3: "https://cdnjs.cloudflare.com/ajax/libs/d3/3.5.5/d3.min"}}); require(["d3"], function(d3){ window.d3 = d3; LDAvis_load_lib("https://cdn.rawgit.com/bmabey/pyLDAvis/files/ldavis.v1.0.0.js", function(){ new LDAvis("#" + "ldavis_el202961124743444486412651638", ldavis_el202961124743444486412651638_data); }); }); }else{ // require.js not available: dynamically load d3 & LDAvis LDAvis_load_lib("https://cdnjs.cloudflare.com/ajax/libs/d3/3.5.5/d3.min.js", function(){ LDAvis_load_lib("https://cdn.rawgit.com/bmabey/pyLDAvis/files/ldavis.v1.0.0.js", function(){ new LDAvis("#" + "ldavis_el202961124743444486412651638", ldavis_el202961124743444486412651638_data); }) }); }

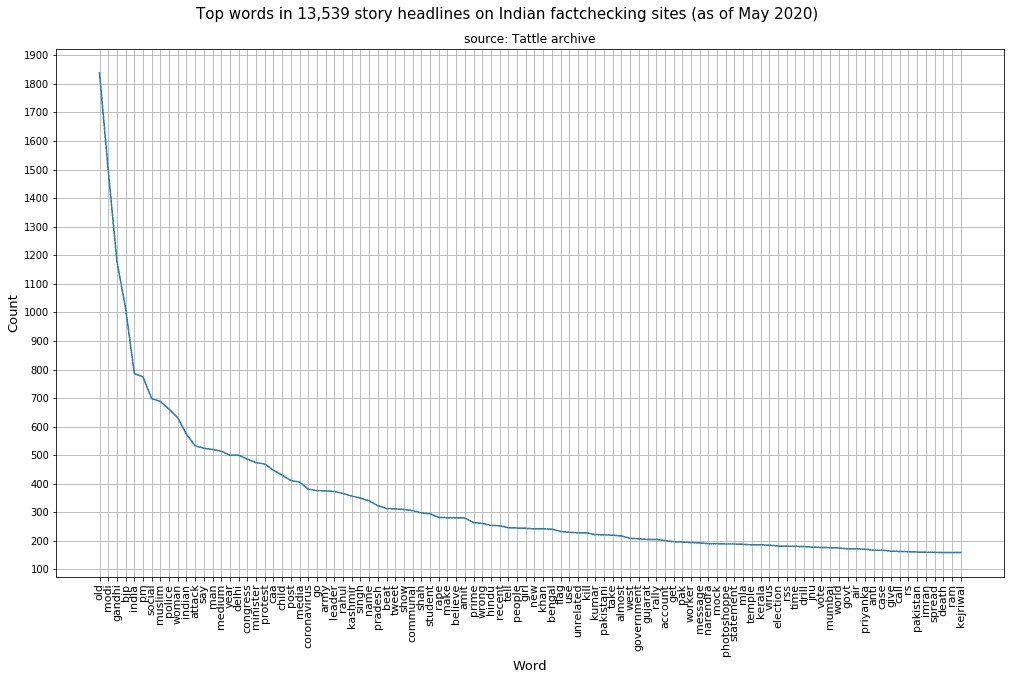

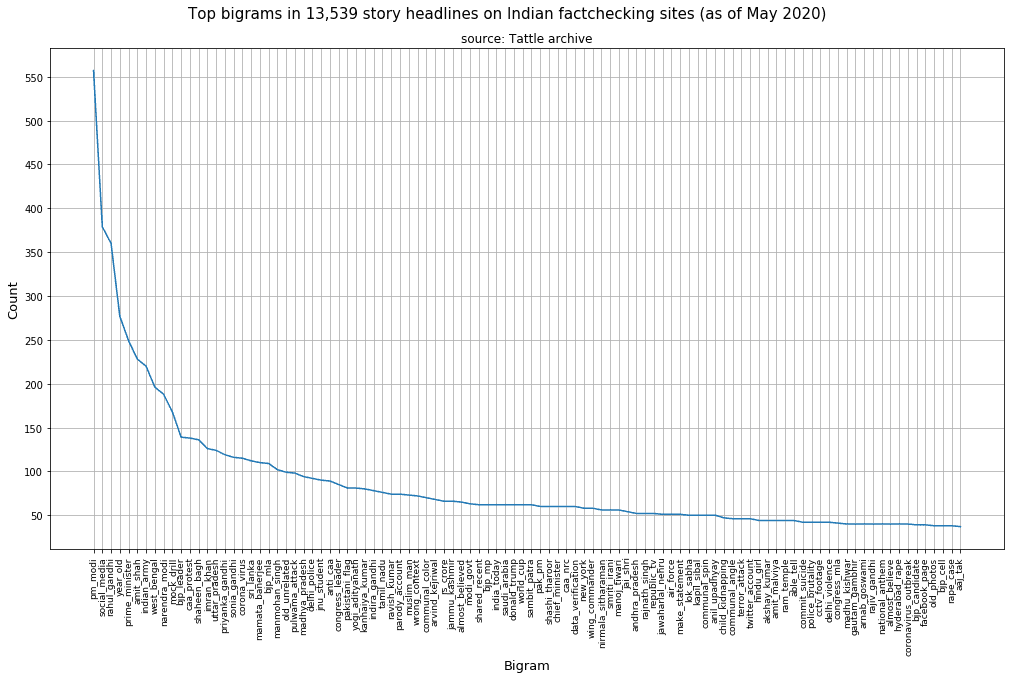

10. N-gram analysis of headlines

The n-gram analysis involves a simple word frequency count (after removing stop words and domain words) to reveal the top words and word-pairs (bigrams) in the corpus. Bigrams are insightful because they capture more information than unigrams.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

# Redoing text processing with adjectives, verbs and adverbs included

def lemmatization(docs, allowed_postags=["NOUN", "PROPN", "VERB", "ADJ", "ADV"]):

nlp = spacy.load("en_core_web_sm")

docs_out = []

for sent in docs:

doc = nlp(" ".join(sent))

docs_out.append([token.lemma_ for token in doc if token.pos_ in allowed_postags])

return docs_out

data_lemmatized = lemmatization(data_with_bigrams, allowed_postags=["NOUN", "PROPN", "VERB", "ADJ", "ADV"])

# Removing any stopwords created because of lemmatization

data_cleaned = [[word for word in doc if word not in stop_words] for doc in data_lemmatized]

# Plot frequency distribution of most common words in the headlines

all_sents = [" ".join(doc) for doc in data_cleaned]

tokenizer = regextoken("[a-zA-Z0-9]+")

all_words = tokenizer.tokenize(str(all_sents))

fd = FreqDist()

for word in all_words:

fd[word] += 1

# Plotting the top 50 most frequent words

# plt.figure(figsize = (15, 10))

# fd.plot(50)

# plt.show()

fd_sorted={k: v for k, v in sorted(fd.items(), key=lambda item: item[1], reverse=True)}

fd_sorted=dict(list(fd_sorted.items())[0: 100])

plt.figure(figsize = (15, 10))

plt.suptitle("Top words in 13,539 story headlines on Indian factchecking sites (as of May 2020)", fontsize= 15)

plt.title("source: Tattle archive", fontdict = {'fontsize': 12})

plt.xlabel(xlabel = "Word", fontdict = {'fontsize': 13})

plt.ylabel(ylabel = "Count", fontdict = {'fontsize': 13})

plt.xticks(rotation=90, size =11)

plt.yticks(np.arange(0, 2000, 100))

plt.plot(fd_sorted.keys(), fd_sorted.values())

plt.grid()

plt.tight_layout(pad=4)

plt.savefig("word_plot.png", bbox_inches='tight')

plt.show()1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

# # Plot frequency distribution of most common word-pairs in the headlines

bigrams_list=[]

for idx in range(len(data_cleaned)):

for token in bigram[data_cleaned[idx]]:

if '_' in token:

# If token is bigram, add it to document

bigrams_list.append(token)

fd = FreqDist()

for b in bigrams_list:

fd[b] += 1

fd_sorted={k: v for k, v in sorted(fd.items(), key=lambda item: item[1], reverse=True)}

fd_sorted=dict(list(fd_sorted.items())[0: 100])

plt.figure(figsize = (15, 10))

plt.suptitle("Top bigrams in 13,539 story headlines on Indian factchecking sites (as of May 2020)", fontsize= 15)

plt.title("source: Tattle archive", fontdict = {'fontsize': 12})

plt.xlabel(xlabel = "Bigram", fontdict = {'fontsize': 13})

plt.ylabel(ylabel = "Count", fontdict = {'fontsize': 13})

plt.xticks(rotation=90, size =9)

plt.yticks(np.arange(0, 800, 50))

plt.plot(fd_sorted.keys(), fd_sorted.values())

plt.grid()

plt.tight_layout(pad=4)

plt.savefig("bigram_plot.png", bbox_inches='tight')

plt.show()11. Applications

We have performed topic modelling and ngram analysis on 13,000+ fact-checking story headlines, including headlines that were originally in Indian languages. Some possible uses and applications of this analysis are:

- As a starting point for**research and solutions** surrounding misinformation in India

- For content curation i.e. targeting content categories aligned with the produced topics / words while scraping social media for misinformation

- To prioritise relevant content while archiving using the top words from the topic model and ngram analysis as filters

- Using some of these topics as tags / labels when annotating potential misinformation, eg. on the Tattle annotation UI